PRESS RELEASE

June 10, 2024

Introducing Apple Intelligence, the personal intelligence system that puts powerful generative models at the core of iPhone, iPad, and Mac

Setting a new standard for privacy in AI, Apple Intelligence understands personal context to deliver intelligence that is helpful and relevant

CUPERTINO, CALIFORNIA - Apple today introduced Apple Intelligence, the personal intelligence system for iPhone, iPad, and Mac that combines the power of generative models with personal context to deliver intelligence that’s incredibly useful and relevant. Apple Intelligence is deeply integrated into iOS 18, iPadOS 18, and macOS Sequoia. It harnesses the power of Apple silicon to understand and create language and images, take action across apps, and draw from personal context to simplify and accelerate everyday tasks. With Private Cloud Compute, Apple sets a new standard for privacy in AI, with the ability to flex and scale computational capacity between on-device processing and larger, server-based models that run on dedicated Apple silicon servers.

“We’re thrilled to introduce a new chapter in Apple innovation. Apple Intelligence will transform what users can do with our products — and what our products can do for our users,” said Tim Cook, Apple’s CEO. “Our unique approach combines generative AI with a user’s personal context to deliver truly helpful intelligence. And it can access that information in a completely private and secure way to help users do the things that matter most to them. This is AI as only Apple can deliver it, and we can’t wait for users to experience what it can do.”

New Capabilities for Understanding and Creating Language

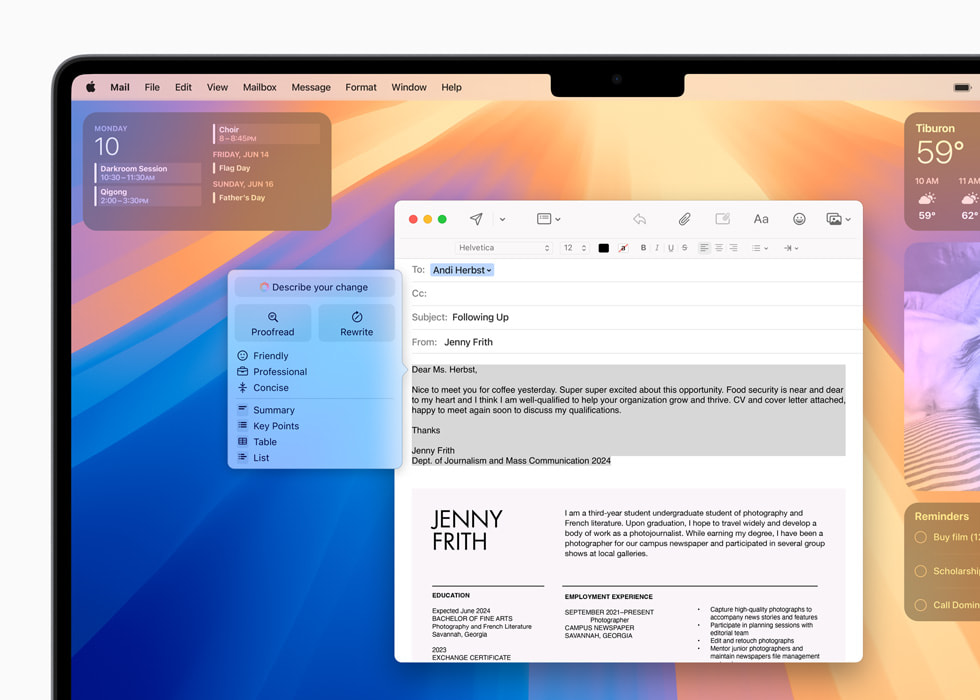

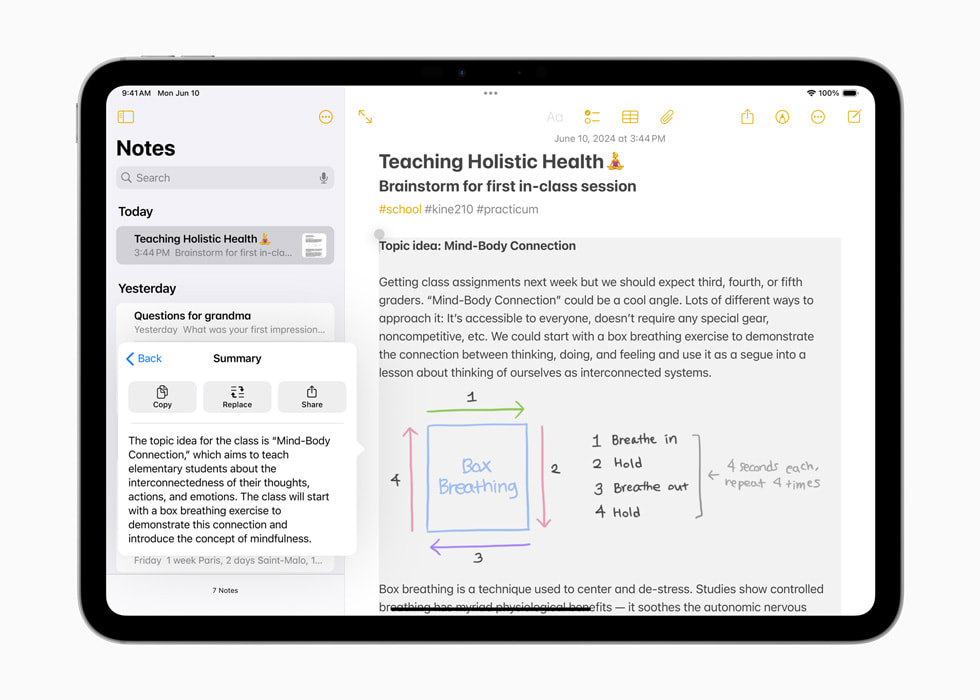

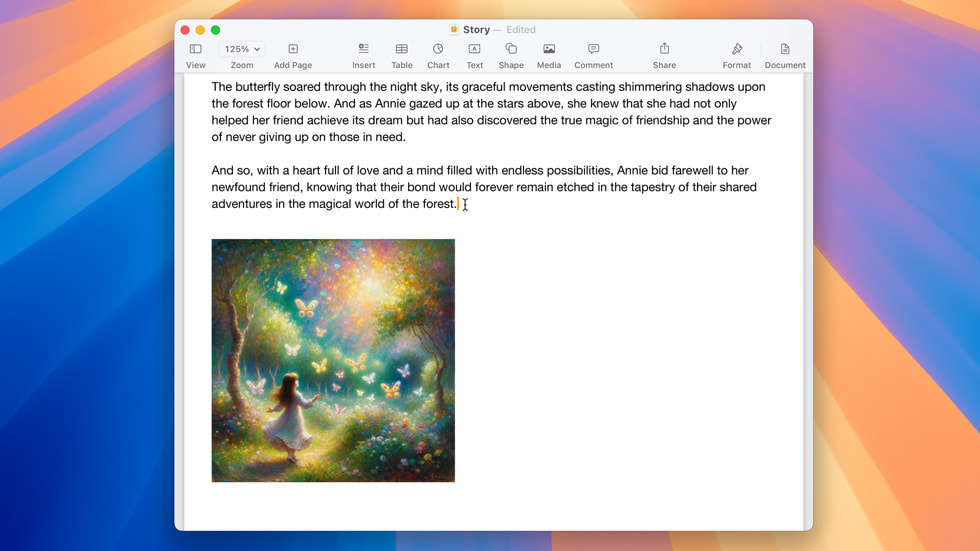

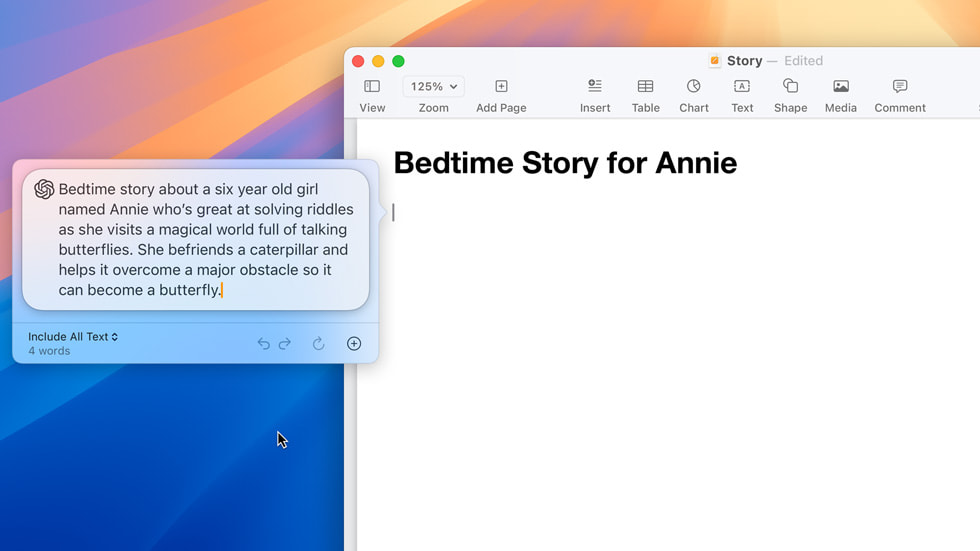

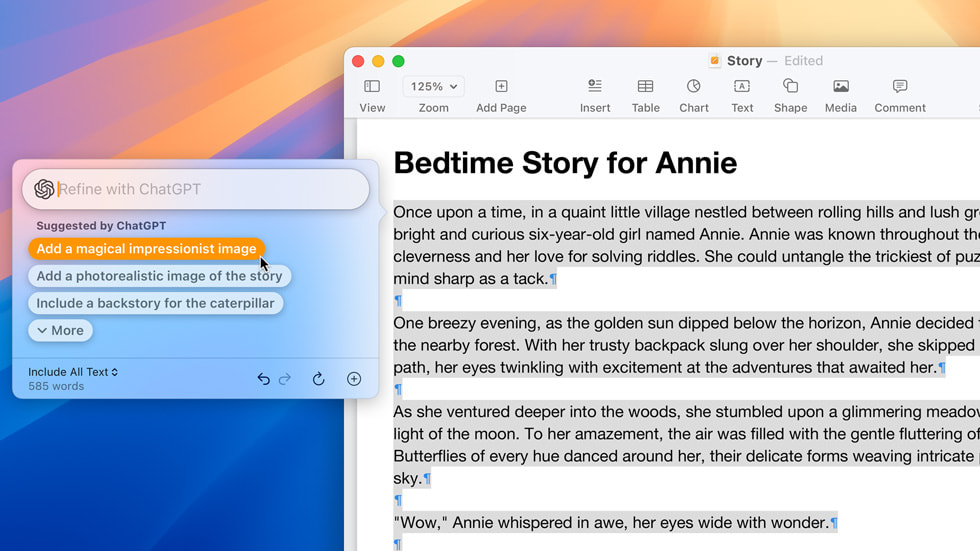

Apple Intelligence unlocks new ways for users to enhance their writing and communicate more effectively. With brand-new systemwide Writing Tools built into iOS 18, iPadOS 18, and macOS Sequoia, users can rewrite, proofread, and summarize text nearly everywhere they write, including Mail, Notes, Pages, and third-party apps.

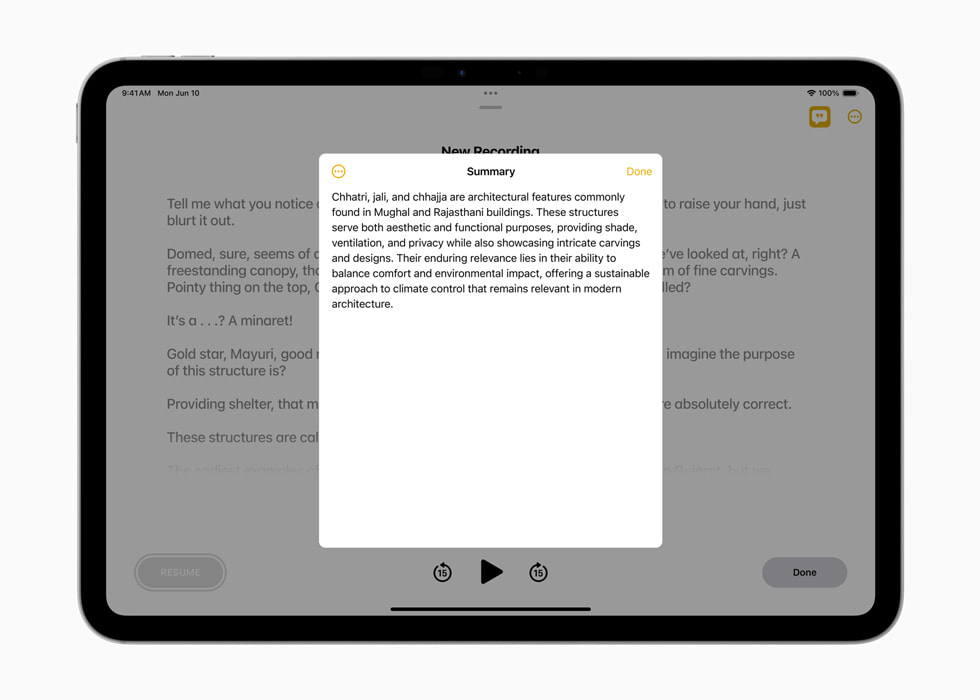

Whether tidying up class notes, ensuring a blog post reads just right, or making sure an email is perfectly crafted, Writing Tools help users feel more confident in their writing. With Rewrite, Apple Intelligence allows users to choose from different versions of what they have written, adjusting the tone to suit the audience and task at hand. From finessing a cover letter, to adding humor and creativity to a party invitation, Rewrite helps deliver the right words to meet the occasion. Proofread checks grammar, word choice, and sentence structure while also suggesting edits — along with explanations of the edits — that users can review or quickly accept. With Summarize, users can select text and have it recapped in the form of a digestible paragraph, bulleted key points, a table, or a list.

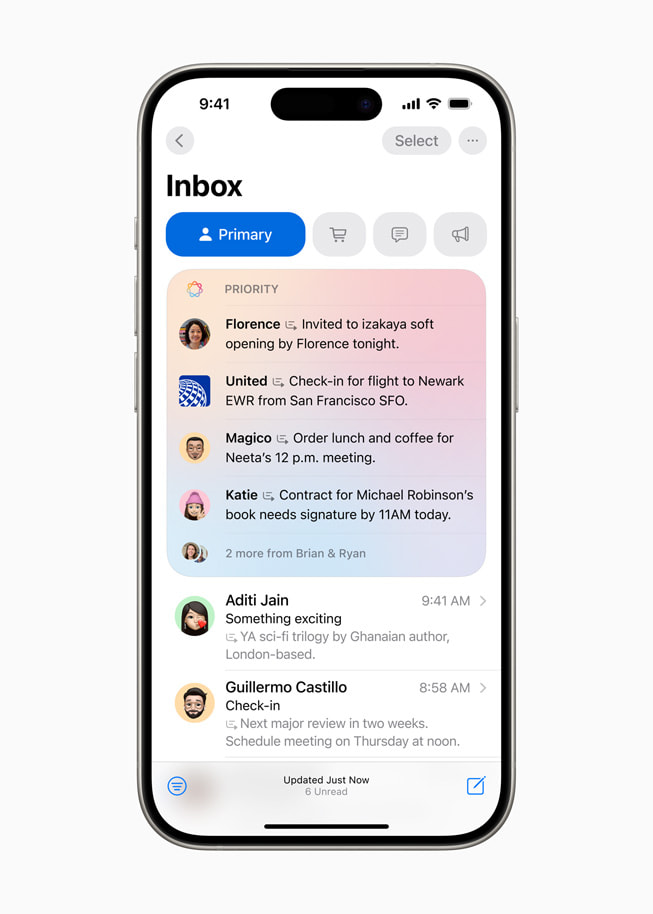

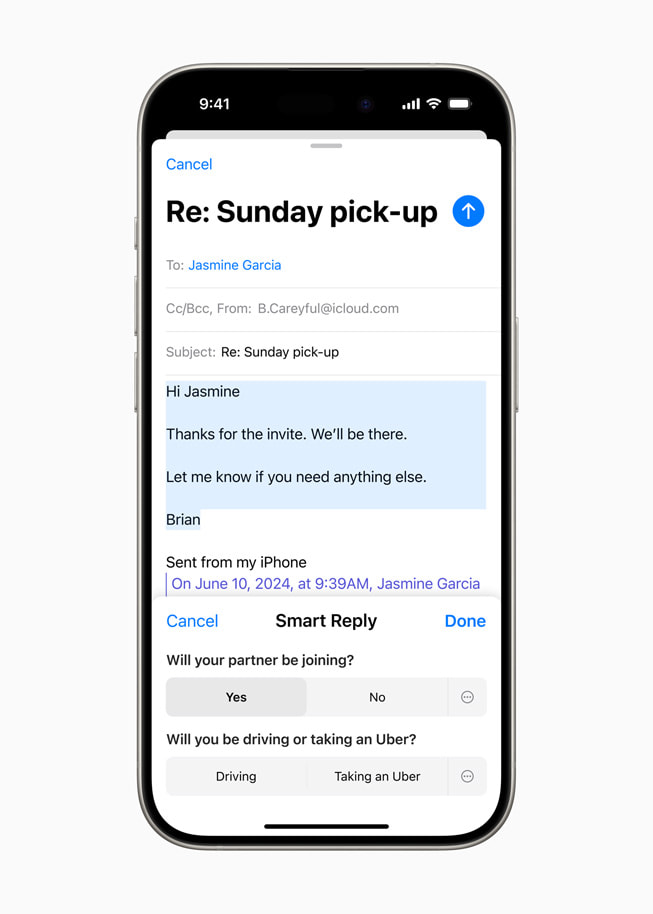

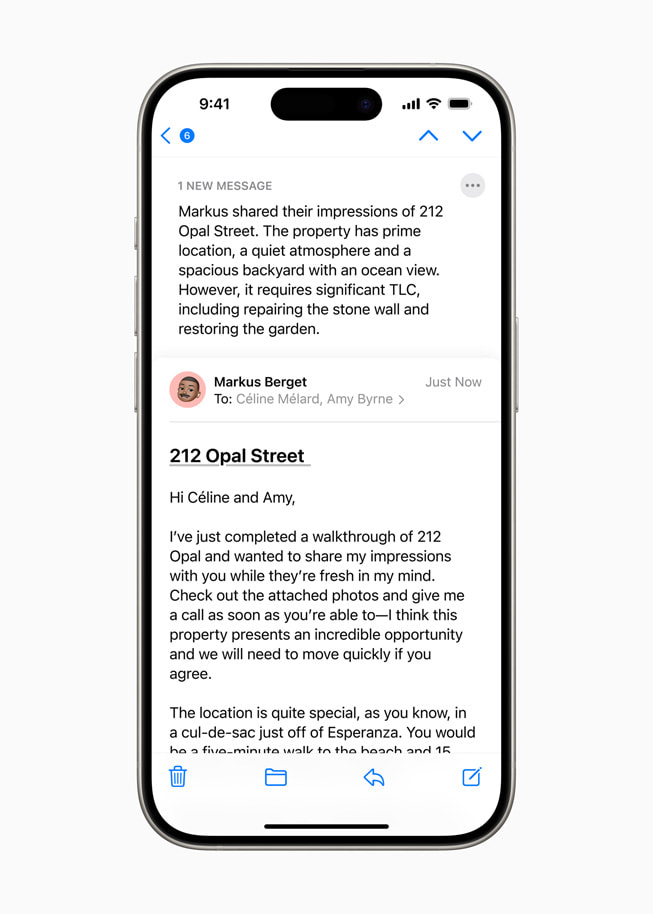

In Mail, staying on top of emails has never been easier. With Priority Messages, a new section at the top of the inbox shows the most urgent emails, like a same-day dinner invitation or boarding pass. Across a user’s inbox, instead of previewing the first few lines of each email, they can see summaries without needing to open a message. For long threads, users can view pertinent details with just a tap. Smart Reply provides suggestions for a quick response, and will identify questions in an email to ensure everything is answered.

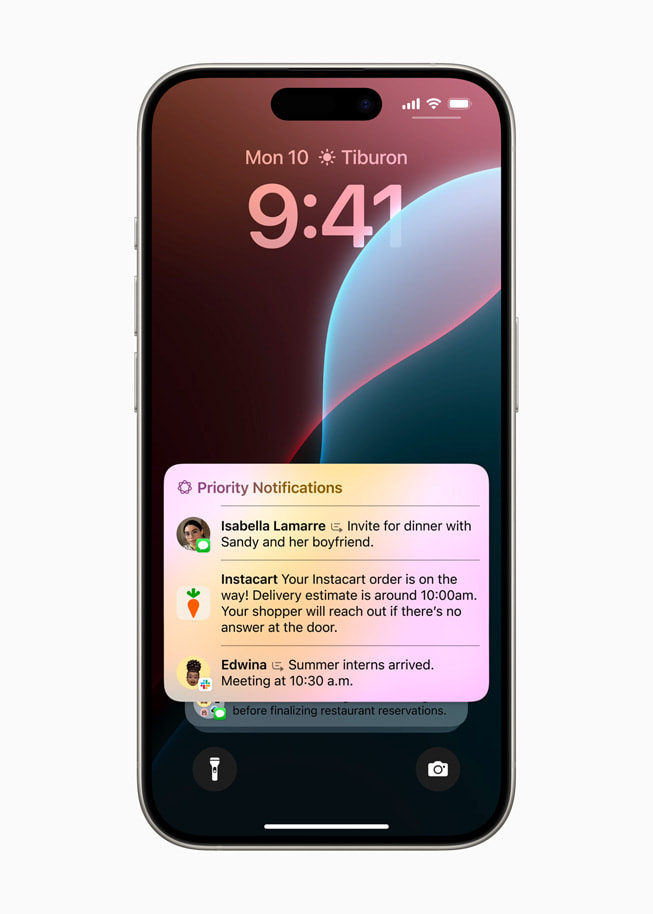

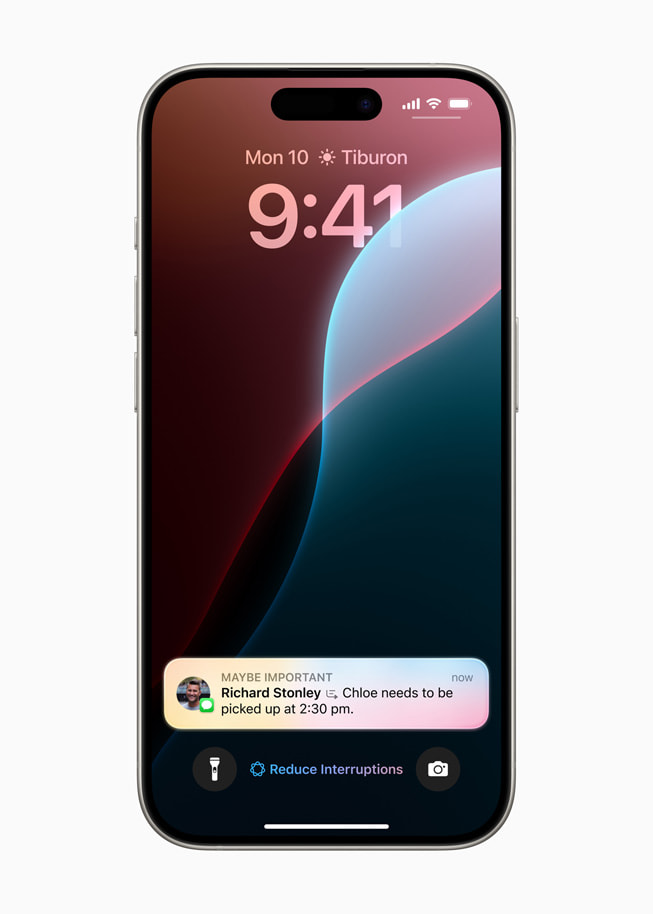

Deep understanding of language also extends to Notifications. Priority Notifications appear at the top of the stack to surface what’s most important, and summaries help users scan long or stacked notifications to show key details right on the Lock Screen, such as when a group chat is particularly active. And to help users stay present in what they’re doing, Reduce Interruptions is a new Focus that surfaces only the notifications that might need immediate attention, like a text about an early pickup from daycare.

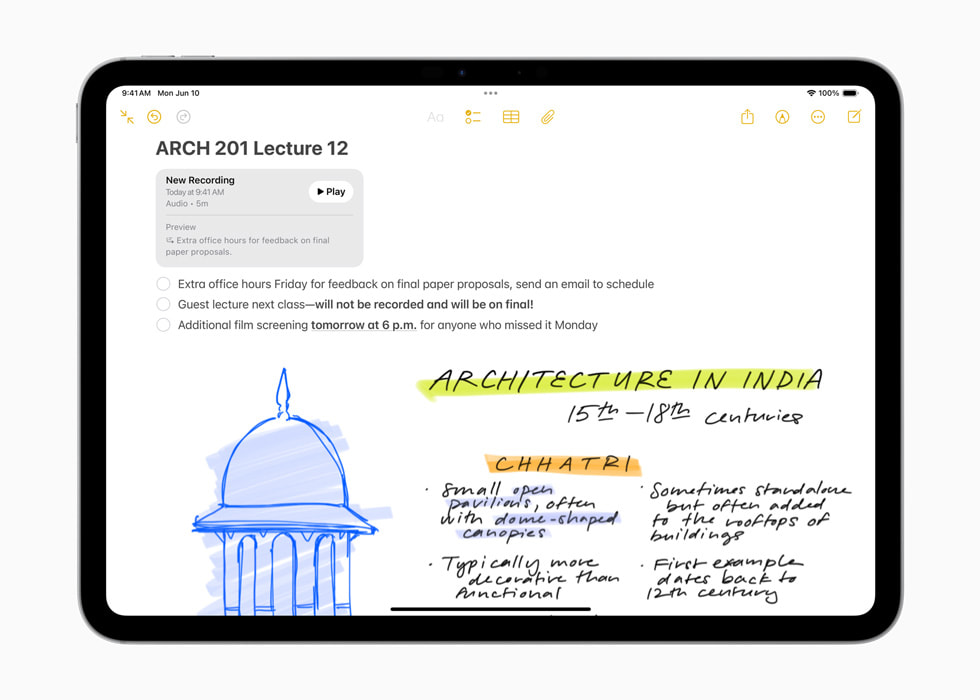

In the Notes and Phone apps, users can now record, transcribe, and summarize audio. When a recording is initiated while on a call, participants are automatically notified, and once the call ends, Apple Intelligence generates a summary to help recall key points.

Image Playground Makes Communication and Self‑Expression Even More Fun

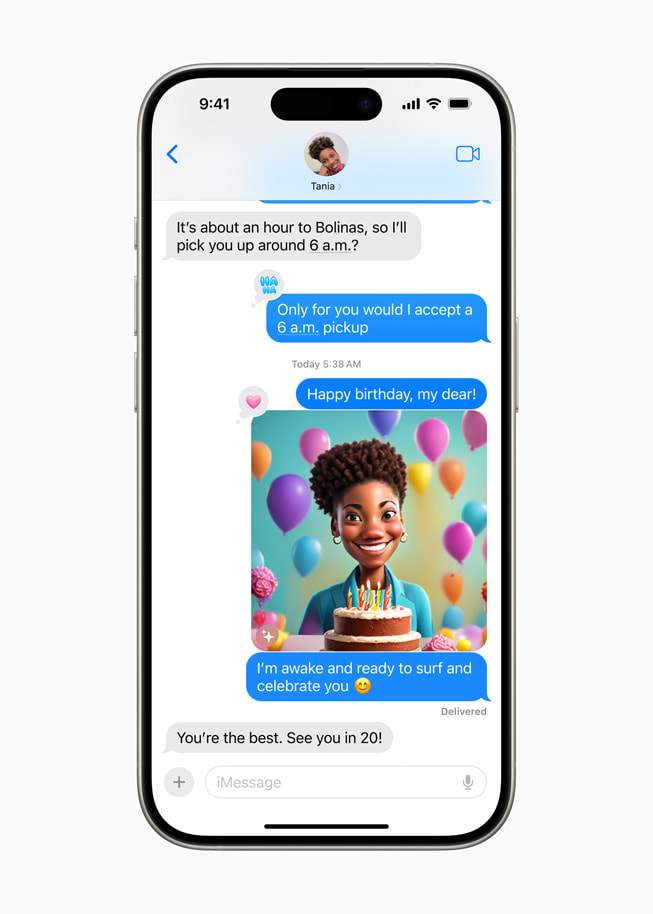

Apple Intelligence powers exciting image creation capabilities to help users communicate and express themselves in new ways. With Image Playground, users can create fun images in seconds, choosing from three styles: Animation, Illustration, or Sketch. Image Playground is easy to use and built right into apps including Messages. It’s also available in a dedicated app, perfect for experimenting with different concepts and styles. All images are created on device, giving users the freedom to experiment with as many images as they want.

With Image Playground, users can choose from a range of concepts from categories like themes, costumes, accessories, and places; type a description to define an image; choose someone from their personal photo library to include in their image; and pick their favorite style.

With the Image Playground experience in Messages, users can quickly create fun images for their friends, and even see personalized suggested concepts related to their conversations. For example, if a user is messaging a group about going hiking, they’ll see suggested concepts related to their friends, their destination, and their activity, making image creation even faster and more relevant.

In Notes, users can access Image Playground through the new Image Wand in the Apple Pencil tool palette, making notes more visually engaging. Rough sketches can be turned into delightful images, and users can even select empty space to create an image using context from the surrounding area. Image Playground is also available in apps like Keynote, Freeform, and Pages, as well as in third-party apps that adopt the new Image Playground API.

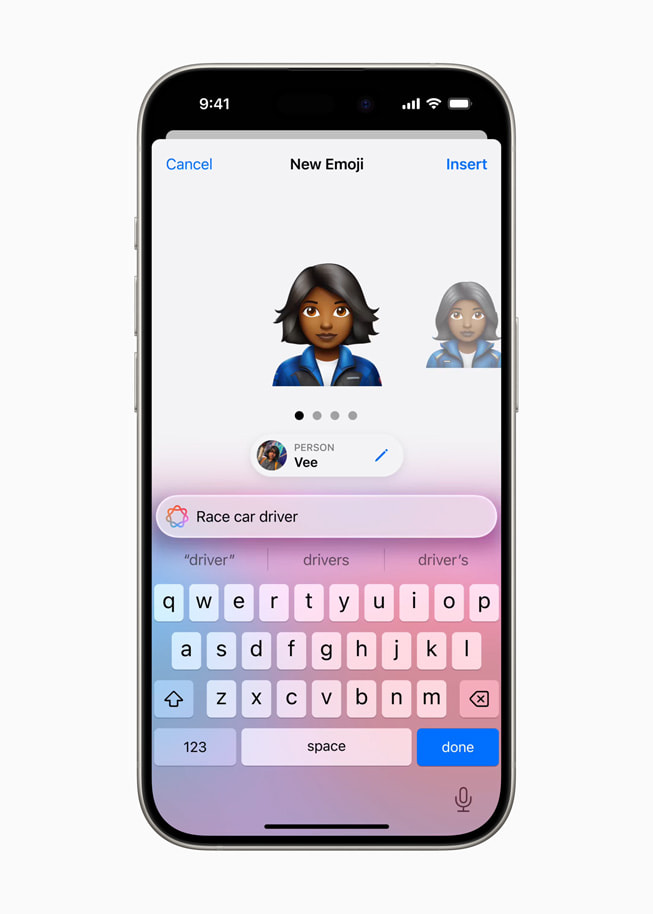

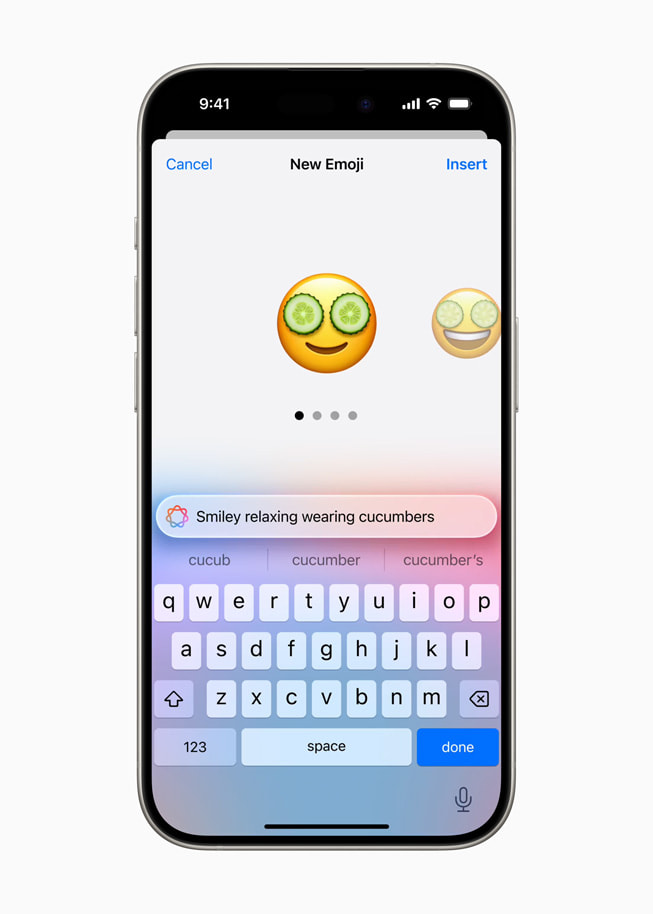

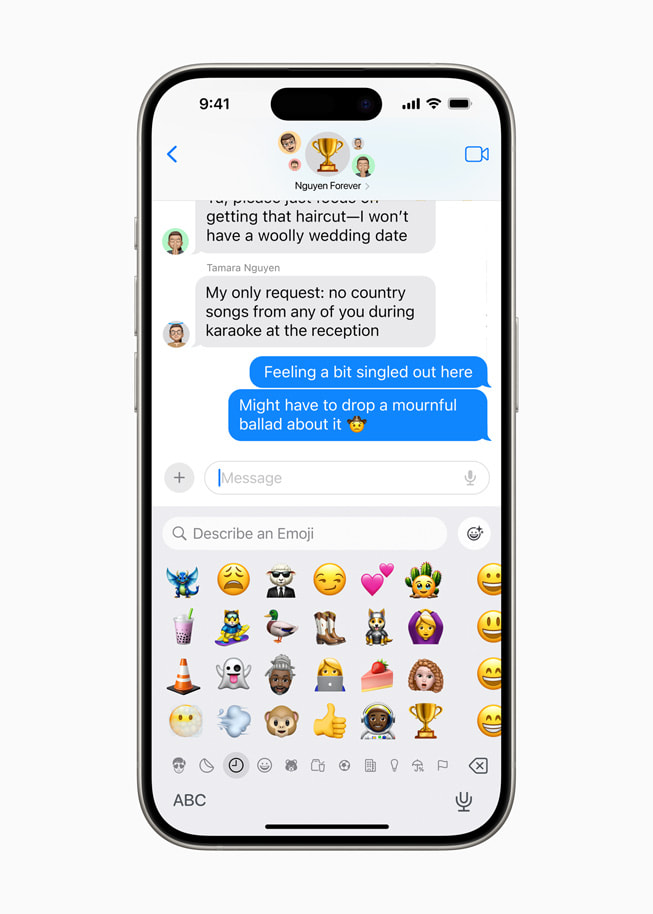

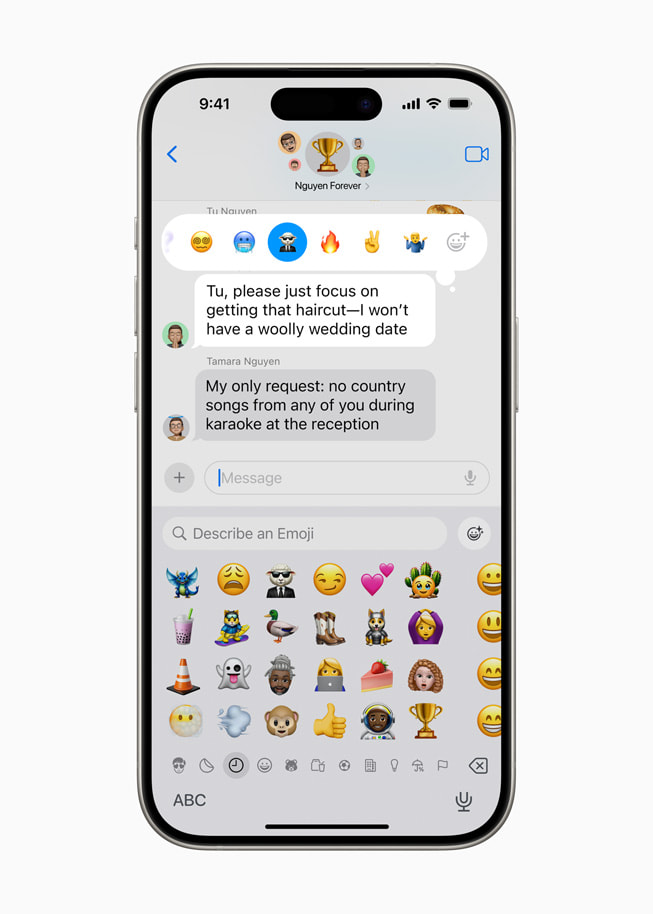

Genmoji Creation to Fit Any Moment

Taking emoji to an entirely new level, users can create an original Genmoji to express themselves. By simply typing a description, their Genmoji appears, along with additional options. Users can even create Genmoji of friends and family based on their photos. Just like emoji, Genmoji can be added inline to messages, or shared as a sticker or reaction in a Tapback.

New Features in Photos Give Users More Control

Searching for photos and videos becomes even more convenient with Apple Intelligence. Natural language can be used to search for specific photos, such as “Maya skateboarding in a tie-dye shirt,” or “Katie with stickers on her face.” Search in videos also becomes more powerful with the ability to find specific moments in clips so users can go right to the relevant segment. Additionally, the new Clean Up tool can identify and remove distracting objects in the background of a photo — without accidentally altering the subject.

With Memories, users can create the story they want to see by simply typing a description. Using language and image understanding, Apple Intelligence will pick out the best photos and videos based on the description, craft a storyline with chapters based on themes identified from the photos, and arrange them into a movie with its own narrative arc. Users will even get song suggestions to match their memory from Apple Music. As with all Apple Intelligence features, user photos and videos are kept private on device and are not shared with Apple or anyone else.

Siri Enters a New Era

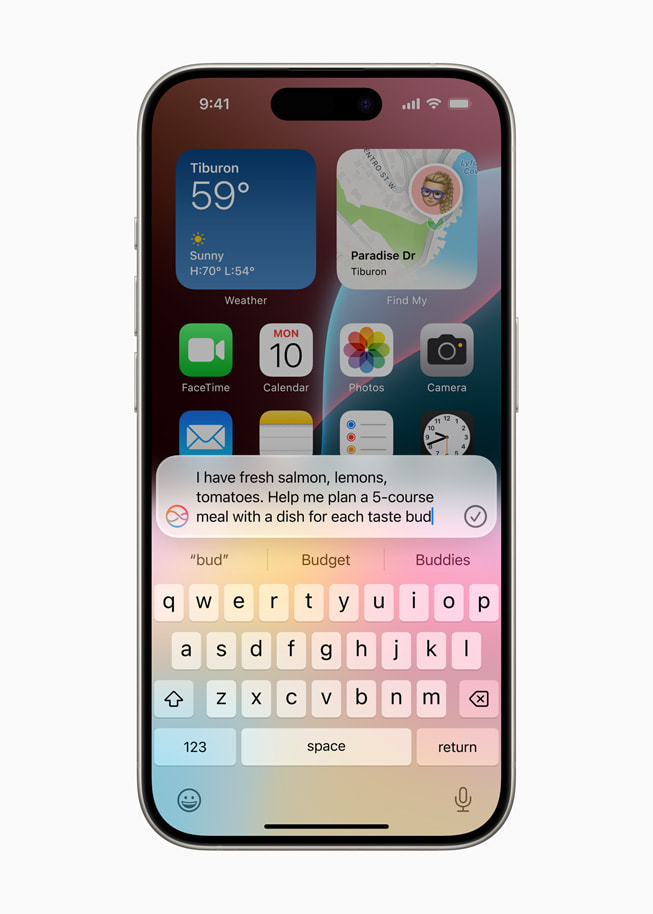

Powered by Apple Intelligence, Siri becomes more deeply integrated into the system experience. With richer language-understanding capabilities, Siri is more natural, more contextually relevant, and more personal, with the ability to simplify and accelerate everyday tasks. It can follow along if users stumble over words and maintain context from one request to the next. Additionally, users can type to Siri, and switch between text and voice to communicate with Siri in whatever way feels right for the moment. Siri also has a brand-new design with an elegant glowing light that wraps around the edge of the screen when Siri is active.

Siri can now give users device support everywhere they go, and answer thousands of questions about how to do something on iPhone, iPad, and Mac. Users can learn everything from how to schedule an email in the Mail app, to how to switch from Light to Dark Mode.

With onscreen awareness, Siri will be able to understand and take action with users’ content in more apps over time. For example, if a friend texts a user their new address in Messages, the receiver can say, “Add this address to his contact card.”

With Apple Intelligence, Siri will be able to take hundreds of new actions in and across Apple and third-party apps. For example, a user could say, “Bring up that article about cicadas from my Reading List,” or “Send the photos from the barbecue on Saturday to Malia,” and Siri will take care of it.

Siri will be able to deliver intelligence that’s tailored to the user and their on-device information. For example, a user can say, “Play that podcast that Jamie recommended,” and Siri will locate and play the episode, without the user having to remember whether it was mentioned in a text or an email. Or they could ask, “When is Mom’s flight landing?” and Siri will find the flight details and cross-reference them with real-time flight tracking to give an arrival time.

A New Standard for Privacy in AI

To be truly helpful, Apple Intelligence relies on understanding deep personal context while also protecting user privacy. A cornerstone of Apple Intelligence is on-device processing, and many of the models that power it run entirely on device. To run more complex requests that require more processing power, Private Cloud Compute extends the privacy and security of Apple devices into the cloud to unlock even more intelligence.

With Private Cloud Compute, Apple Intelligence can flex and scale its computational capacity and draw on larger, server-based models for more complex requests. These models run on servers powered by Apple silicon, providing a foundation that allows Apple to ensure that data is never retained or exposed.

Independent experts can inspect the code that runs on Apple silicon servers to verify privacy, and Private Cloud Compute cryptographically ensures that iPhone, iPad, and Mac do not talk to a server unless its software has been publicly logged for inspection. Apple Intelligence with Private Cloud Compute sets a new standard for privacy in AI, unlocking intelligence users can trust.

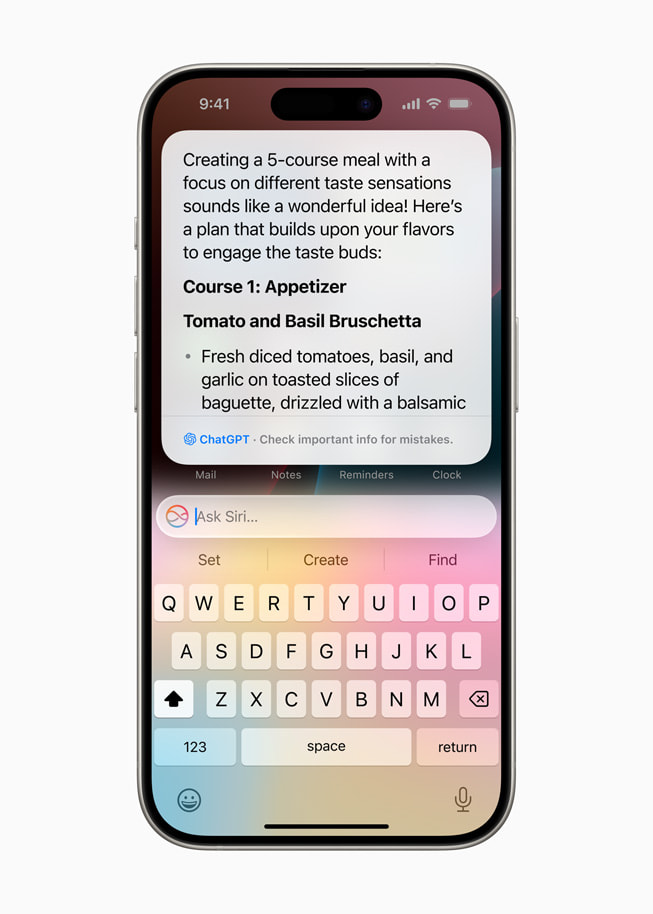

ChatGPT Gets Integrated Across Apple Platforms

Apple is integrating ChatGPT access into experiences within iOS 18, iPadOS 18, and macOS Sequoia, allowing users to access its expertise — as well as its image- and document-understanding capabilities — without needing to jump between tools.

Siri can tap into ChatGPT’s expertise when helpful. Users are asked before any questions are sent to ChatGPT, along with any documents or photos, and Siri then presents the answer directly.

Additionally, ChatGPT will be available in Apple’s systemwide Writing Tools, which help users generate content for anything they are writing about. With Compose, users can also access ChatGPT image tools to generate images in a wide variety of styles to complement what they are writing.

Privacy protections are built in for users who access ChatGPT — their IP addresses are obscured, and OpenAI won’t store requests. ChatGPT’s data-use policies apply for users who choose to connect their account.

ChatGPT will come to iOS 18, iPadOS 18, and macOS Sequoia later this year, powered by GPT-4o. Users can access it for free without creating an account, and ChatGPT subscribers can connect their accounts and access paid features right from these experiences.

Availability

Apple Intelligence is free for users, and will be available in beta as part of iOS 18, iPadOS 18, and macOS Sequoia this fall in U.S. English. Some features, software platforms, and additional languages will come over the course of the next year. Apple Intelligence will be available on iPhone 15 Pro, iPhone 15 Pro Max, and iPad and Mac with M1 and later, with Siri and device language set to U.S. English. For more information, visit apple.com/apple-intelligence.
